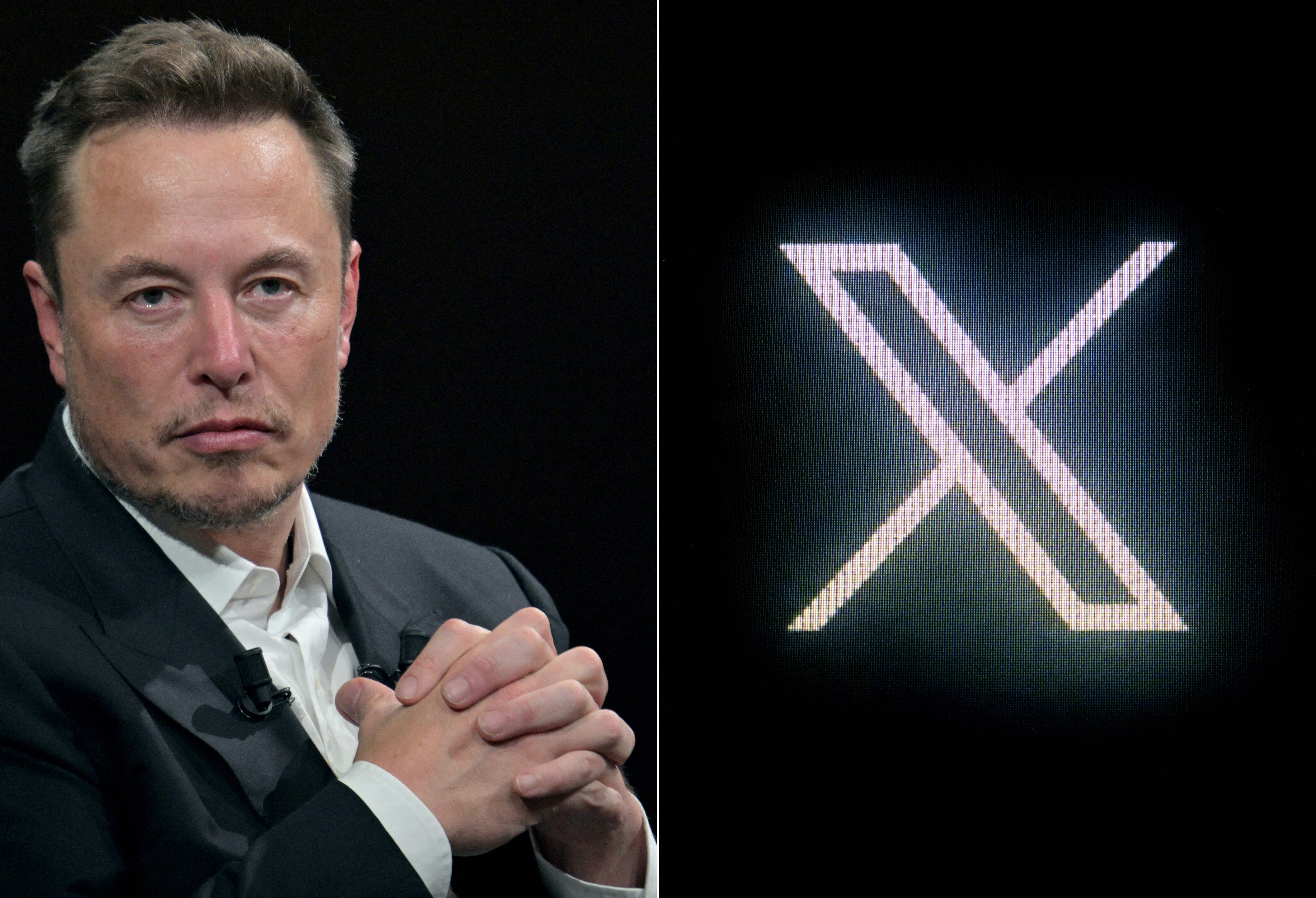

How Musk’s X fuelled racist targeting of Muslims and migrants after Southport attack

A new analysis of X’s algorithm has revealed how the platform played a “central role” in spreading false narratives in England last summer.

Amnesty International analyzed the platform’s own source code, which was published in March 2023, and has shown how it is “systematically prioritizing” anger without sufficient measures to prevent harm.

The human rights group said that the design of the software created fertile ground for inflammatory racist narratives to develop after the Southport attack last year.

On July 29, 2024, three young girls, Alice da Silva Aguiar, Bebe King and Elsie Dot Stancombe, were killed by 17-year-old Axel Rudakubana at a Taylor Swift-themed dance class, and 10 other people were injured.

The report says that, before the authorities had shared official accounts, false statements and Islamophobic narratives began to circulate on social media last summer.

This false information resulted in weeks of racist riots that spread across the country, and a number of hotels housing asylum seekers were targeted by the far right.

Amnesty International said that, in the critical window after the Southport attack, X’s algorithmic system meant that inflammatory posts went viral, even if they contained false information.

The report found no evidence that the algorithm assesses a post’s potential for harm before boosting it on the basis of engagement, which allowed false information to spread before accurate information could be shared.

The report said that these engagement-first design choices contributed to heightened risks amid a wave of anti-Muslim and anti-immigrant violence seen in various places in the UK at the time, and which continues to pose a serious human rights risk today.

“As long as a tweet drives engagement, the algorithm appears to have no mechanism to assess its potential for harm – at least not until enough users report it.”

The report also highlights the bias towards “Premium” users on X, such as Andrew Tate, who posted a video claiming that the attacker was an immigrant who had arrived by boat.

Tate had previously been banned from Twitter for hate speech and harmful content, but his account was restored at the end of 2023 under Elon Musk’s “amnesty” for suspended users.

Since taking over the platform in 2022, Musk has also laid off content moderation staff, disbanded Twitter’s Trust and Safety Council and fired trust and safety engineers.

“Our analysis shows that X’s algorithmic design and policy choices contributed to heightened risks amid a wave of violence.

“Without effective safeguards, the likelihood of inflammatory or hostile posts gaining traction during periods of heightened social tension increases.”

An X spokesman said: “We are determined to keep X safe for all our users. Our safety teams use a combination of measures to act proactively against content and accounts that violate our rules, including our hateful conduct and synthetic and manipulated media policies, before they affect the safety of our platform.

“In addition, our crowd-sourced fact-checking feature, Community Notes, plays an important role in supporting the work of our safety teams to address potentially misleading posts on the X platform.”